When I started covid.observer about seven months ago, I thought there would be no need to update it after about 3-4 months. In reality, we are approaching the end of the year, and I will have to fix the graphs that display data per week, as the week numbers will very soon wrap around.

All this time, more data kept arriving, and I added even more by introducing separate statistics for the regions of Russia, with its 85 subdivisions, which brought the total count of countries and regions up to almost 400.

    mysql> select count(distinct cc) from totals;
    +--------------------+
    | count(distinct cc) |
    +--------------------+
    |                392 |
    +--------------------+
    1 row in set (0.00 sec)

Because frequent upstream updates change data in the past, it is not that easy to update the statistics incrementally, and again, I did not expect that I’d run the site for so long.

    mysql> select count(distinct date) from daily_totals;
    +----------------------+
    | count(distinct date) |
    +----------------------+
    |                  329 |
    +----------------------+
    1 row in set (0.00 sec)

The bottom line is that the daily generation became clumsy and far from smooth. Before summer, the whole website could be regenerated in less than 15 minutes, but now it takes 40-50 minutes. And I tend to do it twice a day, as a fresh portion of today’s Russian data arrives a few hours after we get the daily update from Johns Hopkins University (for yesterday’s stats).

But the scariest signals came when the program started crashing with quite unpleasant errors.

    Latest JHU data on 12/12/20
    Latest RU data on 12/13/20
    Generating impact timeline...
    Generating world data...
    MoarVM panic: Unable to initialize event loop

    Failed to open file /Users/ash/Projects/covid.observer/COVID-19/csse_covid_19_data/csse_covid_19_daily_reports_us/12-10-2020.csv: Too many open files

    Generating impact timeline...
    Generating world data...
    Not enough positional arguments; needed at least 4
      in sub per-capita-data at /Users/ash/Projects/covid.observer/lib/CovidObserver/Statistics.rakumod (CovidObserver::Statistics) line 1906

The errors were not consistent, and I managed to get the update by re-running the program piece by piece. But none of the errors was easy to explain.

The MoarVM panic gives no explanation, but it disappears completely if I run the program in two steps:

    $ ./covid.raku fetch
    $ ./covid.raku generate

instead of a combined run that both fetches the data and generates the statistics:

$ ./covid.raku update

The Too many open files error is a strange one: while I process the files in loops, I do not intentionally keep them open. But that error seems to be solved by raising the limit of open file descriptors for the shell session:

$ ulimit -n 10000
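Raising the limit treats the symptom. As a minimal sketch of the other side of the problem, here is what closing handles explicitly inside a loop looks like. The first-lines helper and the temporary file names are hypothetical and are not taken from the covid.observer code, and nothing here claims to be the actual cause of the error above.

```raku
# Hypothetical helper: read the header line of each file and close
# every handle explicitly instead of waiting for garbage collection.
sub first-lines(@paths) {
    my @headers;
    for @paths -> $path {
        my $fh = open $path, :r;
        @headers.push: $fh.get;   # first line, without the newline
        $fh.close;                # release the file descriptor right away
    }
    @headers
}

# Create a few throwaway files to run the helper on.
my @paths = (0..2).map: { $*TMPDIR.add("handles-sketch-{$_}.csv") };
for @paths.kv -> $i, $path {
    $path.spurt("col-{$i},confirmed\n1,2\n");
}

my @headers = first-lines(@paths);
say @headers.elems;   # 3

.unlink for @paths;   # clean up the temporary files
```

A handle opened with open and never closed stays open until it is garbage collected, which is why an explicit close (or a LEAVE phaser) is the safer habit in long loops.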

The final error, Not enough positional arguments; needed at least 4, is the weirdest. Such a thing happens when you call a function with fewer arguments than it expects. It had not occurred for months, after all the bugs were found and fixed, so it can only be explained by a new piece of data. Indeed, some data may be missing, but I believed I had already found all the cases where I need to pass default zero values to the function calls.

Given all that, and the fact that the program runs for dozens of minutes before you can catch an error, it was quite frustrating.
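To illustrate how missing data can turn into a missing argument, here is a small sketch. The unaccounted-cases sub and the sample row are invented for the example, and the exact wording of the exception may differ from the message quoted above.

```raku
# Hypothetical stand-in for a routine with four required positionals.
sub unaccounted-cases($confirmed, $failed, $recovered, $active) {
    $confirmed - $failed - $recovered - $active
}

my @row = 1200, 30, 900;   # the fourth value is missing in this row

# Flattening a short list into the call fails at run time, not at compile time.
my $result = try unaccounted-cases(|@row);
say "call failed: {$!.message}" without $result;

# Padding the row with default zeros makes the call safe again.
my @padded = (^4).map: { @row[$_] // 0 };
my $safe = unaccounted-cases(|@padded);
say $safe;   # 270
```

The // 0 default is the same technique the site already uses wherever a value may be absent.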

And here comes Liz!

She offered to look into things and actually spent the whole day, first installing the code and all its requirements and then doing the actual job of running, debugging, and re-running it. By the end of the day she created a pull request, which made the program twice as fast!

Let’s look at the changes. There are three of them (but no, they do not directly answer the above-mentioned three error messages).

The first two changes introduce parallel processing of countries (remember, there are about 400 of what is considered a unique $cc in the program).

    my %country-stats = get-known-countries<>.race(:1batch,:8degree).map: -> $cc {
        $cc => generate-country-stats($cc, %CO, :%mortality, :%crude, :$skip-excel)
    }

Calling .race on the result of the get-known-countries() function parallelizes the previously sequential processing of countries. Indeed, their stats are computed independently, so there’s no reason for one country to wait for another. The parameters of race, the batch size and the number of workers, can probably be tuned to fit your hardware.
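Here is a self-contained sketch of the same pattern that you can run without the site's code. The slow-stats sub and the list of country codes are placeholders, with sleep standing in for the real work, and the degree of 4 is an arbitrary choice.

```raku
# Hypothetical stand-in for an expensive per-country computation.
sub slow-stats(Str $cc) {
    sleep 0.2;                  # pretend to do real work
    $cc.comb.map(*.ord).sum     # some deterministic value per code
}

my @codes = <NL RU US DE FR IT ES CN>;

my $start = now;
my %stats = @codes.race(:1batch, :4degree).map: -> $cc {
    $cc => slow-stats($cc)
};
my $elapsed = now - $start;

say %stats.elems;   # 8
say "took {$elapsed.round(0.1)}s";
```

Note that .race does not promise to keep the results in their original order, which does not matter when they end up as pairs in a hash. If the order is important, .hyper takes the same parameters and preserves it.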

The second change is similar, but for another part of the code where the continents are processed in a loop:

    for %continents.keys.race(:1batch,:8degree) -> $cont {
        generate-continent-stats($cont, %CO, :$skip-excel);
    }

Finally, the third change is to make some counters native integers instead of Raku Ints:

    my int $c = $confirmed[$index] // 0;
    my int $f = $failed[$index] // 0;
    my int $r = $recovered[$index] // 0;
    my int $a = $active[$index] // 0;

I understand that this reduces both the memory footprint and the processing time of these variables, but for some reason it also eliminated the error about the number of positional arguments.
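A tiny sketch of the same idea with made-up data: a native int cannot hold an undefined value, so the // 0 default is what makes the assignment safe when an element is missing. The array here is invented for the example.

```raku
# Sample data with a gap; Nil in an array turns into an undefined element.
my @confirmed = 10, 25, Nil, 40;

my int $total = 0;
for ^@confirmed.elems -> $index {
    # Fall back to zero when the element is undefined.
    my int $c = @confirmed[$index] // 0;
    $total += $c;
}

say $total;   # 75
```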

And finally, I want to mention the <> thing that you may have noticed in the first code change. This is the so-called decontainerization operator. What it does is illustrated by this example from the documentation:

    use JSON::Tiny;

    my $config = from-json('{ "files": 3, "path": "/home/some-user/raku.pod6" }');
    say $config.raku;
    # OUTPUT: «${:files(3), :path("/home/some-user/raku.pod6")}»

    my %config-hash = $config<>;
    say %config-hash.raku;
    # OUTPUT: «{:files(3), :path("/home/some-user/raku.pod6")}»

The $config variable is a scalar variable that keeps a hash. To work with it as with a hash, the variable is decontainerized as $config<>. This gives us a proper hash %config-hash.
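The same effect is easy to see with a list kept in a scalar. This is a small sketch with made-up variable names: a for loop treats the container as a single item, while the decontainerized value is iterated element by element.

```raku
my $items = (1, 2, 3);

# The scalar container is seen as one item: a single iteration.
my $boxed-iterations = 0;
$boxed-iterations++ for $items;

# After decontainerization, the loop visits each element.
my $plain-iterations = 0;
$plain-iterations++ for $items<>;

say $boxed-iterations;   # 1
say $plain-iterations;   # 3
```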

I think that’s it for now. The main advantage of the above changes is that the program now needs less than 25 minutes to re-generate the whole site and it does not fail.

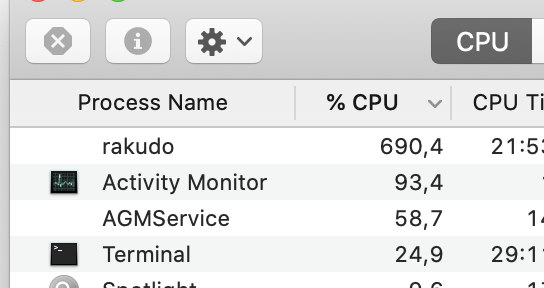

Well, it also became a bit louder, as Rakudo now uses more cores 🙂

Thanks, Liz!